I was recently assigned a technical project — to create a micro-service architecture and design the system from the ground up. After clearing the proposal and receiving approval from management, I thought it would be a good idea to post the results and keep adding to this document to further build on the insights I’ve gathered to improve the application. I love open-source solutions and Product Managers might also find this useful! Below are my findings.

Overview

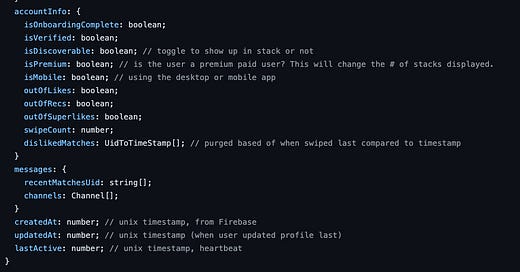

My understanding from the prompt is that a user is looking for a matchmaking service (in the form of a native application) that can connect people to different business owners based on goals, interests, and industries. I thought it would be best to take a step back and approach this from a high level by first designing the Entity-Relationship Diagram (ERD). I’ve linked a preliminary UserAccountClass here as a GitHub Gist and also attached an image below:

While designing the architecture, I primarily focused on the Objectives field, which contained what the user was looking to get from the app.

Are you looking for a job, a mentor, someone to mentor, a way to exchange industry knowledge, or something else?

Building a user recommendation algorithm should start with the user, which is why I wanted to start scaffolding the UserProfile first to get an understanding of how everything might work and add additional fields based on needs and requirements.

Based on the supplied mockups and interface, I divided Objectives into these four categories: Coffee, Collab, Tutoring, and Jobs. This would form the bulk of the RecommendationEngine.
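The profile and its Objectives could be modeled along these lines. This is a minimal sketch: only the four category names come from the mockups, while every field name (uid, jobTitle, lastActive and so on) and the sharedObjectives helper are placeholders of mine rather than the contents of the Gist.

```typescript
// The four Objectives categories taken from the mockups.
type Objective = "Coffee" | "Collab" | "Tutoring" | "Jobs";

// Hypothetical profile shape; the field names are assumptions for illustration.
interface UserProfile {
  uid: string;
  displayName: string;
  jobTitle: string;
  objectives: Objective[];
  lastActive: number; // Unix epoch milliseconds
}

// Returns the Objectives two profiles have in common, in a's order.
function sharedObjectives(a: UserProfile, b: UserProfile): Objective[] {
  return a.objectives.filter((o) => b.objectives.indexOf(o) !== -1);
}

const alice: UserProfile = {
  uid: "u1",
  displayName: "Alice",
  jobTitle: "Photographer",
  objectives: ["Coffee", "Collab"],
  lastActive: 1612137600000,
};

const bob: UserProfile = {
  uid: "u2",
  displayName: "Bob",
  jobTitle: "Graphic Designer",
  objectives: ["Collab", "Jobs"],
  lastActive: 1612137600000,
};

console.log(sharedObjectives(alice, bob)); // [ 'Collab' ]
```

Keeping Objectives as a closed union means a new category has to be added in one place before any screen or service can use it.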

FRONTEND

I first needed to define the use cases and the optimal infrastructure for the mobile application. Since I have previous experience in JavaScript, I thought it would be best to go with React Native (TypeScript) with a mix of Firebase Functions and hopefully a serverless backend. More on that later.

Using React Native (or Expo at the start) should give feature parity across iOS and Android, remove the need to maintain two codebases, and leave the door open to a future launch of the platform on PCs via React Native for Web.

For Market Research I looked at the onboarding process of Tinder:

They have their own login system and OAuth from several social media platforms. They also request your mobile number and the subsequent OTP for verification. Firebase has similar Authentication features, including one-time password phone verification.

I’d start by porting and converting over all the UI/ UX designs via Expo for quick iterations and rapid development, and then eject if and when native modules are required.

I’d use a combination of global classes/ themes while also introducing a highly componentized design to reduce redundancy. Each screen will be divided into separate components for reusability and modularity, e.g., a PrimaryButtonComponent for Calls to Action (CTAs) usable everywhere, UserCardComponents for use on the NetworkScreen, and a ChatItemComponent on the MessagesScreen. The Business Logic of the application (including but not limited to any calculations) will be completely separated from the designs and screens to allow for the future web launch.

The ChatScreen for each thread can be broken into the ChatBubble, which will grab the entire conversation and spread the props based on the senderUid (whether it’s a sent/ received message.) The Disconnect option in chat will purge the database of the entire conversation thread.
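The sent/ received split can live in plain business logic, away from the screen itself. Below is a rough sketch of that idea. Only senderUid comes from the description above; ChatMessage, ChatBubbleProps, and toBubbleProps are hypothetical names, and the actual ChatBubble component would simply render these props.

```typescript
// Hypothetical message shape; only senderUid is named in the text above.
interface ChatMessage {
  id: string;
  senderUid: string;
  text: string;
  sentAt: number; // Unix epoch milliseconds
}

// Props a ChatBubble component could receive (assumed shape).
interface ChatBubbleProps {
  key: string;
  variant: "sent" | "received";
  text: string;
}

// Orders a thread oldest-first and tags each message as sent or received
// relative to the signed-in user.
function toBubbleProps(thread: ChatMessage[], currentUid: string): ChatBubbleProps[] {
  return thread
    .slice() // copy so the caller's array is not reordered
    .sort((a, b) => a.sentAt - b.sentAt)
    .map((m): ChatBubbleProps => ({
      key: m.id,
      variant: m.senderUid === currentUid ? "sent" : "received",
      text: m.text,
    }));
}

const demoThread: ChatMessage[] = [
  { id: "m2", senderUid: "bob", text: "Sure, Friday?", sentAt: 2000 },
  { id: "m1", senderUid: "alice", text: "Coffee this week?", sentAt: 1000 },
];

const bubbles = toBubbleProps(demoThread, "alice");
console.log(bubbles.map((b) => b.variant + ": " + b.text));
```

Because the function has no React dependency, the same logic could be reused unchanged in a later web client.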

I’m not familiar with the Remarks option and how that might work but I’m imagining a pop-up modal that will allow a user to give feedback for a particular user. This can also be highly component-based. I’ve gone into more depth on the Report function in the Content Moderation part under the POTENTIAL FUTURE REQUIREMENTS section below.

Fuse.js can assist with the SearchBarPillComponent in the MessagesScreen and, as defined by the UserAccount, results can be filtered by name, by full-text conversation, or by Objectives.

I’d use React Navigation to navigate between screens and Redux for State Management.

Updates can be sent over the air with CodePush and will allow for immediate bug fixes based on any errors reported by Sentry or AppsFlyer.

Dynamic User-related Notifications can also be pushed to the device with AppsFlyer.

Firebase Analytics/ Segment is a great way to visualize conversions and analyze user data.

I’d also use Hermes (for Android) to improve start-up time, decrease memory usage, and reduce the app package size.

For optimizations, you can either ship only WebP images in-app or introduce a middleman bundler like Webpack to minify code pre-build. Heavy caching (memoization) will be implemented to reduce network overhead and unnecessary calls to the servers.
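As one way to picture the caching idea, here is a small memoization helper with a time-to-live. It is a sketch under my own assumptions: memoizeWithTtl, the one-second TTL, and the injected clock are all illustrative, and a real app would wrap an actual network call instead of the stand-in loader.

```typescript
// Wraps a loader so repeated calls with the same key reuse the cached value
// until ttlMs has elapsed. The clock is injectable to make expiry testable.
function memoizeWithTtl<T>(
  load: (key: string) => T,
  ttlMs: number,
  now: () => number = Date.now
): (key: string) => T {
  const entries = new Map<string, { value: T; expiresAt: number }>();
  return (key: string): T => {
    const currentTime = now();
    const hit = entries.get(key);
    if (hit !== undefined && hit.expiresAt > currentTime) {
      return hit.value; // still fresh: skip the loader
    }
    const value = load(key);
    entries.set(key, { value, expiresAt: currentTime + ttlMs });
    return value;
  };
}

// Stand-in for a server call; counts how often it actually runs.
let loaderCalls = 0;
let fakeClock = 0;
const getProfileLabel = memoizeWithTtl(
  (uid: string) => {
    loaderCalls += 1;
    return "profile:" + uid;
  },
  1000,
  () => fakeClock
);

getProfileLabel("u1"); // runs the loader
getProfileLabel("u1"); // served from the cache
fakeClock = 1500;
getProfileLabel("u1"); // entry expired, loader runs again
console.log(loaderCalls); // 2
```

The TTL is the knob that trades freshness against network calls; profile data that rarely changes can tolerate a much longer value than chat state.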

Internationalization via react-native-localize might help in a) providing translators a separate file to convert all text into whatever language is preferred, and b) decoupling the business logic and screens from the actual generated text. Also, the more languages, the more global the app can become.

Testing would be a combination of Jest and React Native Testing Library for unit and component tests, plus Detox for E2E tests.

BACKEND SERVICES

The Backend would have multiple Services and I’ve attempted to utilize a micro-service architecture to a) decouple any authentication mechanisms (regarding access) so there aren’t any clashes and b) create a single source of truth so the user can always go back to the previous state if they delete the application from their phone.

The three journeys would be something similar to this:

Client (New User) -> UserCreationService -> dBClient -> Web Socket -> RecommendationEngine -> sends the information on what Objectives a user requires (sends multiple micro shards, makes a parallel call to each of these shards, and receives the stack of User Profiles for that particular day).

Client -> Web Socket -> MatchingService -> ChatService

Each client request will be sent to a gateway and ProfileService to ensure the right person has access to the correct data. Every request will be preceded by a check on their access control.

Similar to how Monet, another newly formed dating app, works, we can match users globally at the start rather than limiting matches by country. This forms the basis for sharding the database by Objectives rather than only by geolocation.

I’ve highlighted the necessary services and gone into more depth below.

RecommendationEngine:

Instead of Gender/ Age/ Location (which is what dating applications primarily use to form a recommendation), we can use Objectives, Job Title, and Location.

Pulls out all relevant people from the ProfileService, or it could just be storing the userIDs and the Objectives.

To optimize for latency, we can shard the data by Objectives, and later on by geographical location, once enough users have onboarded.

To strike a perfect balance between low latency and cost $$$, one can host a single virtual server for every shard per cell. A cell can start as high-level categories taken from various freelancing websites (such as Photographer/ Graphic Designer/ Filmmaker, etc), and if and when required we can create subcategories to reduce latency. For example, there might be a huge number of Photographers, but a dearth of magicians, which means you’ll have to break up the number of photographers but can include all magicians in a single shard.

The shards can also be balanced based on the Unique User Count (in a particular location), active user count, and # of queries from a certain region.

By employing the use of a TagManager/ TagService we can modify the type of recommendations a user receives and make the system modular. TagManager in this context would be the parameters you choose. For now, we can stick to Objectives.
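To make the sharding idea a little more concrete, here is a rough sketch of how a shard key could be derived from an Objective and a category cell, splitting only the crowded cells. Everything here is assumed for illustration: the 1,000-user capacity, the CellStats shape, the hash function, and the key format are not part of any existing service.

```typescript
type Objective = "Coffee" | "Collab" | "Tutoring" | "Jobs";

// Hypothetical per-cell statistics, e.g. one cell per freelancing category.
interface CellStats {
  category: string;
  userCount: number;
}

// Assumed capacity of a single shard; a real value would come from load testing.
const MAX_USERS_PER_SHARD = 1000;

// Crowded cells are split into several shards, sparse cells stay in one.
function shardsForCell(cell: CellStats): number {
  return Math.max(1, Math.ceil(cell.userCount / MAX_USERS_PER_SHARD));
}

// Small deterministic string hash (djb2 style) so a user always lands on the same shard.
function hashUid(uid: string): number {
  let h = 5381;
  for (let i = 0; i < uid.length; i++) {
    h = (h * 33 + uid.charCodeAt(i)) >>> 0; // keep it an unsigned 32-bit integer
  }
  return h;
}

// Builds a key such as "Collab/Photographer/2".
function shardKeyFor(objective: Objective, cell: CellStats, uid: string): string {
  const index = hashUid(uid) % shardsForCell(cell);
  return objective + "/" + cell.category + "/" + index;
}

const photographers: CellStats = { category: "Photographer", userCount: 2500 };
const magicians: CellStats = { category: "Magician", userCount: 40 };

console.log(shardsForCell(photographers)); // 3
console.log(shardsForCell(magicians)); // 1
console.log(shardKeyFor("Collab", magicians, "uid-42")); // Collab/Magician/0
```

One caveat with a simple modulo scheme is that changing the shard count moves most users to a different shard, so a production system would likely want consistent hashing or a lookup table instead.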

RecommendationAlgorithm (part of the RecommendationService):

Active Usage: upon app open, update the lastActive field on UserProfile.

Collect Tags: Objectives, Job Title, Location, Experience, etc

Grouping Users: In its preliminary stage, we can start by matching users to their needs and requirements based on the provided Objectives, and as soon as we have sufficient data and a sense of the value-add of a particular user we can start creating groups of users. This essentially means the combination of people who like your profile as a whole, and the people you like who like you back. This will pave the way for an Elo matching system and will generate better recommendations for new users. An example of this would be graphic designers matching with like-minded graphic designers for the pure objective of collaboration.

Pickiness/ bad actors: Low (or egregiously high) swipe percentage will affect the quality of your matches and reduce your Elo.

Reply Rate: penalizes accounts that have a low reply rate percentage

Progressive Taxation: A high-value account (for instance a mentor with 10+ years of experience in the banking sector) will get taxed and normalized to make the playing field more ‘fair’ for other user profiles.

GROUP BY Stacks: if a user swipes left on a particular stack, show the surrounding stack cells instead. For example, a user who is employed as a photographer looking for collaborations might also want to collaborate with Graphic Designers (instead of just photographers) since there is some overlap.
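The scoring ideas in this list can be sketched with the standard Elo formulas plus two simple adjustments. Treat this as a hedged illustration: the Elo expected-score and update formulas are the textbook ones, but mapping a right swipe to a "win", the K-factor of 32, the tax threshold, and the reply-rate weighting are all assumptions of mine.

```typescript
// Assumed tuning constants; none of these values come from real data.
const K_FACTOR = 32;
const TAX_THRESHOLD = 1800;
const TAX_KEEP_FRACTION = 0.5;

// Standard Elo expected score of A against B, between 0 and 1.
function expectedScore(ratingA: number, ratingB: number): number {
  return 1 / (1 + Math.pow(10, (ratingB - ratingA) / 400));
}

// Standard Elo update. Assumption: outcome is 1 when B swipes right on A, 0 when left.
function updatedRating(ratingA: number, ratingB: number, outcome: 0 | 1): number {
  return ratingA + K_FACTOR * (outcome - expectedScore(ratingA, ratingB));
}

// Progressive taxation: points above the threshold only count for a fraction.
function taxedRating(rating: number): number {
  if (rating <= TAX_THRESHOLD) {
    return rating;
  }
  return TAX_THRESHOLD + (rating - TAX_THRESHOLD) * TAX_KEEP_FRACTION;
}

// Reply-rate penalty: an account that never replies keeps half of its taxed rating.
function rankingScore(rating: number, replyRate: number): number {
  const clamped = Math.min(1, Math.max(0, replyRate));
  return taxedRating(rating) * (0.5 + 0.5 * clamped);
}

console.log(expectedScore(1500, 1500)); // 0.5
console.log(updatedRating(1500, 1500, 1)); // 1516
console.log(taxedRating(2000)); // 1900
console.log(rankingScore(2000, 0)); // 950
```

Keeping the raw rating separate from the ranking score means the tax and the reply-rate penalty can be tuned later without rewriting swipe history.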

UserManagement/ ProfileService:

Each user will have its own record and any creations/ updates/ deletions will be managed by this service. This will include Authentication of each request and the creation of a token for SessionManagement.

There can be multiple authentication mechanisms, for example, an EmailService, but for the sake of brevity, we can use Firebase.

Firebase can provide the relevant access controls and assist in authenticating requests. In the backend, we can also do some redundancy checks. A JWT token should work.

SessionManagement:

To check and see if a user can interact with another user and that they’re active. Also links back to the RecommendationService since this serves as an important matching factor.

GatewayService:

Takes the request and asks the above service if the user is authenticated, and if that resolves as true, forwards the request to the appropriate service.
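In code, the gateway boils down to "verify first, then route". The sketch below shows that flow with in-memory stand-ins. All names (GatewayRequest, routeRequest, the demo verifier and services) are hypothetical, and a real verifier would validate the JWT with the auth provider rather than compare strings.

```typescript
interface GatewayRequest {
  token: string;
  service: string;
  payload: string;
}

interface GatewayResponse {
  statusCode: number;
  body: string;
}

// A downstream service receives the verified uid, never the raw token.
type ServiceHandler = (uid: string, payload: string) => string;

// Returns the uid for a valid token, or null when authentication fails.
type TokenVerifier = (token: string) => string | null;

function routeRequest(
  req: GatewayRequest,
  verifyToken: TokenVerifier,
  services: Record<string, ServiceHandler>
): GatewayResponse {
  const uid = verifyToken(req.token);
  if (uid === null) {
    return { statusCode: 401, body: "unauthenticated" };
  }
  // Own-property check so names like "constructor" are not treated as services.
  if (!Object.prototype.hasOwnProperty.call(services, req.service)) {
    return { statusCode: 404, body: "unknown service" };
  }
  return { statusCode: 200, body: services[req.service](uid, req.payload) };
}

// Demo stand-ins (assumptions): a fixed token and a single profile service.
const demoVerifier: TokenVerifier = (token) => (token === "valid-token" ? "uid-123" : null);
const demoServices: Record<string, ServiceHandler> = {
  profile: (uid, payload) => uid + " requested " + payload,
};

console.log(routeRequest({ token: "valid-token", service: "profile", payload: "me" }, demoVerifier, demoServices));
console.log(routeRequest({ token: "stolen", service: "profile", payload: "me" }, demoVerifier, demoServices));
```

Passing only the verified uid downstream keeps token handling in one place, which is the decoupling the micro-service split is aiming for.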

BillingService:

Billing can be decoupled from UserManagement and can handle accepting different currencies, since the application will be global. A neat way to remove this dependency would be In-App Purchases (IAP), but both Apple and Google charge hefty service fees, so sending users to a website to pay would be a better alternative.

MessageService:

Peer Protocol. XMPP can work here. Web Socket (Firebase) or TCP (building out a protocol of your own).

MatchingService:

A table that lists the users who have matched with whichever other users.

If user A right swipes on user B, both IDs are sent to the MatchingService, which waits for user B to swipe either right or left to complete the relationship and allow for messaging.

If user A left swipes on user B, user B’s profile is marked as hidden to user A and not shown in subsequent stacks.

Every time a swipe occurs, the MatchingService table will check to see whether it’s a match or not. If it’s a left swipe, the UID pair is purged from the database and the profile is marked as hidden for x amount of time for the user who swiped left. If the user right swipes, the dB is checked to see if there is an existing pair for both UIDs. If yes, they match; if no, a new record is created, and the profile is displayed in the awaiting profiles stack.

Note: If a user uninstalls the application, the only information you’ll end up losing is the swipes the user has performed, i.e. whether they have liked or disliked a profile. They may then get recommendations of a user they previously disliked.
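The swipe rules above can be sketched as a small in-memory table. This is an illustration only: MatchingTable and its method names are hypothetical, Sets stand in for the real database, and the "hidden for x amount of time" expiry is left out to keep the example short.

```typescript
type SwipeDirection = "left" | "right";
type SwipeResult = "matched" | "pending" | "hidden";

class MatchingTable {
  // "from>to" entries: right swipes still waiting for the other user's answer.
  private pending = new Set<string>();
  // "viewer>target" entries: profiles hidden from the viewer's stacks.
  private hidden = new Set<string>();
  // Sorted "a|b" entries: completed matches.
  private matches = new Set<string>();

  private static pairKey(a: string, b: string): string {
    return a < b ? a + "|" + b : b + "|" + a;
  }

  swipe(fromUid: string, toUid: string, direction: SwipeDirection): SwipeResult {
    const reverse = toUid + ">" + fromUid;
    if (direction === "left") {
      // Purge any pending pair and hide the profile from the swiper.
      this.pending.delete(reverse);
      this.hidden.add(fromUid + ">" + toUid);
      return "hidden";
    }
    if (this.pending.has(reverse)) {
      // The other user already swiped right: complete the relationship.
      this.pending.delete(reverse);
      this.matches.add(MatchingTable.pairKey(fromUid, toUid));
      return "matched";
    }
    // First right swipe of the pair: record it and wait.
    this.pending.add(fromUid + ">" + toUid);
    return "pending";
  }

  isMatched(a: string, b: string): boolean {
    return this.matches.has(MatchingTable.pairKey(a, b));
  }

  isHiddenFrom(viewerUid: string, targetUid: string): boolean {
    return this.hidden.has(viewerUid + ">" + targetUid);
  }
}

const table = new MatchingTable();
console.log(table.swipe("userA", "userB", "right")); // pending
console.log(table.swipe("userB", "userA", "right")); // matched
console.log(table.swipe("userC", "userA", "left")); // hidden
```

Storing the match under a sorted pair key means the lookup works regardless of which user swiped first.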

NotificationService/ ChatManagement:

Web Sockets/ TCP to keep the connection open and push notifications to inform when a user receives a message, a message is undelivered, and the order and timestamp of each message in a particular thread.

ImageService/ ImageHandler:

Users can share images/ videos/ and other assets in chat.

File Systems are static so you can easily build a CDN over this. Allows fast access and with aggressive caching the overhead and network requests will be negligible.

Cheaper compared to storing Blobs/ Distributed File System (DFS).

I would prefer to decouple the Images from the UserProfile and create an entirely different database that can, in the future, be used for machine learning and improved recommendations, or object detection. DFS + database with all the information for the particular images.

Monitoring: Prometheus for server performance, latency, uptime, and logging/ Sentry for in-app error reporting.

DATABASE

Based on the services required, these are some of the requirements from the database:

Low Latency: Fast Search of Profiles.

Doesn’t necessarily need to be real-time: a new user doesn’t instantly have to be added to the RecommendationService but can be shown other profiles already in the database.

Easy to Shard/ Distributed: for scalability

Full-Text Search Support: there are a lot of parameters to search for (the tags) that need to be fully supported

HTTP Interface: TCP/ Web Socket

Structured Data: XML/ JSON

Elasticsearch meets all of these requirements.

Client -> Elasticsearch Feeder -> Kafka or any Queue (index all the data asynchronously) -> Elasticsearch workers (which will ask the TagService which shard to write into)

Firebase/ Firestore can also cover most of these and has inbuilt helper functions. One gap is full-text search: Cloud Firestore does not index text fields for search natively, so that requirement would need a dedicated search service alongside it. For a real-world setup, you’ll want to configure security rules for Firestore. Cloud Firestore favors a denormalized data structure, so it’s okay to include senderId and senderName for each MessageItem. A denormalized data structure means you’ll duplicate a lot of data, but the upside is faster data retrieval. There are a lot of tradeoffs. From the mockup, I could see a user can also add images and content to chat. Rather than storing the image data directly with the message, you’ll use Firebase Storage, which is better suited to storing large files like audio, video, or images.
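A denormalized MessageItem could look roughly like the sketch below. Only senderId, senderName, and MessageItem are named above; the remaining fields, the SenderSummary type, the buildMessageItem helper, and the example storage path are assumptions, and no Firebase SDK calls are shown.

```typescript
// The slice of a profile that gets copied onto every message (assumed shape).
interface SenderSummary {
  uid: string;
  displayName: string;
}

// Denormalized message document: the sender's name is duplicated here so a
// thread can be rendered without an extra profile lookup per message.
interface MessageItem {
  threadId: string;
  senderId: string;
  senderName: string;
  text: string;
  imagePath: string | null; // location of the file in storage, not the image bytes
  sentAt: number; // Unix epoch milliseconds
}

function buildMessageItem(
  threadId: string,
  sender: SenderSummary,
  text: string,
  sentAt: number,
  imagePath: string | null = null
): MessageItem {
  return {
    threadId,
    senderId: sender.uid,
    senderName: sender.displayName,
    text,
    imagePath,
    sentAt,
  };
}

const demoSender: SenderSummary = { uid: "u1", displayName: "Alice" };
const textMessage = buildMessageItem("thread-9", demoSender, "Coffee this week?", 1000);
const imageMessage = buildMessageItem("thread-9", demoSender, "My portfolio", 2000, "chat/thread-9/portfolio.webp");
console.log(textMessage, imageMessage);
```

The tradeoff shows up when a user renames themselves: every copied senderName is stale until a background job rewrites it, or the app accepts old names on old messages.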

If you’d like to go a more homegrown route, NoSQL databases like Cassandra are good at querying these kinds of data types: you replicate the data and, depending on the query, build an efficient table for it. A distributed database such as Amazon DynamoDB would work here too.

Also, Redis — using transactions to make this process consistent.

If you’d like more control you can use a relational database. The same concepts apply, you’d just have to employ Sharding.

TECHNICAL DEBTS

Working on a project of this breadth and also safeguarding against any single point of failure (leveraging the Master-Slave architecture), will introduce more challenges and complexity. The project will need to be thoroughly tested to ensure feature-parity as well as cohesion between all systems, whether that is client-machine or machine-machine.

I can also imagine combining the older, more mature freelancing platform users with this new app will be somewhat of a challenge and introduce a lot of bandaid code which should be ironed out before launch.

Fortunately, using the error reporting tools both on the client and the server-side should potentially solve both of these issues.

ROADMAP

Building an MVP of the application would be the first step. Knocking out the screens while also figuring out how everything works together (business logic) might be cumbersome but is well worth it at the start.

A web launch can be a possible future addition to the roadmap.

OTHER THOUGHTS AND POSSIBLE FEATURES

At the start, the number of users using the app will be fairly limited, and the best way to sidestep this and induce growth is to optionally include all accounts on the pre-existing platform and send them an email if and when they get matched by our users. This viable route will leverage the existing 30,000+ freelancers and generate leads.

I received a generous amount of help from Tinder’s unofficial API to get some sort of sense of what the required fields would be for a profile of this sort. I also went through the various freelancing websites to grab the CategContent enum to help divide and subsequently subdivide groups of users for lower latency during recommendations.

A move away from the Tinder landscape: if a user swipes too fast on a match, notify them that they’re only receiving x number of matches a day in a particular stack and that they should check out each profile properly, both to a) find a hidden gem or something they can connect with the match over, and b) improve the recommendation engine. Or, show a counter at the top which decrements after every swipe.
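The counter and the "too fast" nudge could share one small piece of state. The sketch below assumes a per-stack daily budget and a minimum gap between swipes; SwipeBudget, recordSwipe, and the two-second gap are illustrative choices, not settled product decisions.

```typescript
interface SwipeBudget {
  remaining: number; // swipes left in this stack for today
  lastSwipeAt: number | null; // epoch ms of the previous swipe, if any
}

interface SwipeOutcome {
  budget: SwipeBudget;
  allowed: boolean; // false once the daily budget is used up
  warnTooFast: boolean; // true when the swipe came sooner than minGapMs after the last one
}

// Returns the new budget plus flags the UI can use for the counter and the nudge.
function recordSwipe(budget: SwipeBudget, at: number, minGapMs: number): SwipeOutcome {
  if (budget.remaining <= 0) {
    return { budget, allowed: false, warnTooFast: false };
  }
  const warnTooFast = budget.lastSwipeAt !== null && at - budget.lastSwipeAt < minGapMs;
  return {
    budget: { remaining: budget.remaining - 1, lastSwipeAt: at },
    allowed: true,
    warnTooFast,
  };
}

const MIN_GAP_MS = 2000; // assumed threshold for "too fast"
const firstSwipe = recordSwipe({ remaining: 2, lastSwipeAt: null }, 0, MIN_GAP_MS);
const secondSwipe = recordSwipe(firstSwipe.budget, 500, MIN_GAP_MS);
const thirdSwipe = recordSwipe(secondSwipe.budget, 5000, MIN_GAP_MS);

console.log(firstSwipe.budget.remaining, firstSwipe.warnTooFast); // 1 false
console.log(secondSwipe.budget.remaining, secondSwipe.warnTooFast); // 0 true
console.log(thirdSwipe.allowed); // false
```

Returning a new budget instead of mutating the old one fits a Redux store, where the counter at the top can simply render the remaining value.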

POTENTIAL FUTURE REQUIREMENTS/ ADDITIONS

Content Moderation: detecting whether people are using false images (celebrity pictures, copied pictures, no pictures at all) can be achieved with machine learning algorithms in ImageService. Text/ Chat Moderation: keeping the platform clean would be of paramount importance, and a database that allows for full-text search would be a great help in moderating chat and improving the community.

Feb 01, 2021 Update:

Imagine getting called out a few days after you share your thoughts:

Most startups (and big companies) don’t need the tech stack they have.

The overhead in setting up Kafka versus a very hefty Postgres (or BigQuery, or your relational database of choice instance hooked up to a web service receiving JSON sensor data) is enormous, because distributed systems are hard - really hard - and much, much harder than traditional systems.

Overarchitecture is real. As Nemil says in this fantastic post,

‘Early in your career, a surprising number of poorly engineered software systems are due to mistakes with engineering media.

In college and at bootcamps, your primary exposure to engineering is through engineering media like Hacker News, meetups, conferences, Free Code Camp, and Hacker Noon. Technology that is widely discussed there — say micro services or a frontend framework or the blockchain — then unnecessarily shows up in your technology stack.’

I’ll keep revising my architecture and stack to strike a better balance between convenience and sophistication.